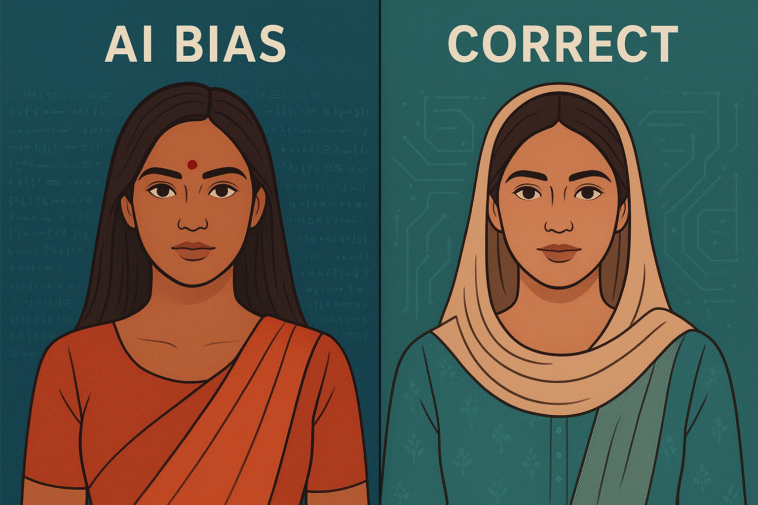

The AI Bindiya Problem:

Why AI Places Bindis on Foreheads and the Cost of Correcting It

Introduction

A curious and persistent phenomenon has emerged in AI-generated imagery: artificial intelligence systems, particularly those used for image and video generation, often incorrectly place a bindiya (a decorative forehead mark, often a stylized bindi or tilak) on the foreheads of people from South Asian communities, even when it is not appropriate or requested. This is not just a quirky error; it is a symptom of deeper issues within AI training and has real-world consequences, including financial waste and cultural misrepresentation.

Part 1: Why Does AI Do This? The Technical & Ethical Roots

The placement of a bindiya is not a conscious choice by the AI but a result of its training data and the patterns it learns. Here are the primary reasons:

1. Biased and Poorly Curated Training Data:

- The “Pattern Recognition” Problem: AI models like Stable Diffusion, DALL-E, and Midjourney are trained on massive datasets of images scraped from the internet (e.g., LAION-5B, subsets of which were used to train Stable Diffusion). These datasets contain inherent biases.

- Over-Representation of Stereotypes: The internet is full of stereotypical representations of cultures. For South Asian women, especially in contexts labeled “traditional,” “Indian,” or “wedding,” images often feature bindis and other forehead decorations. The AI learns a strong correlation: [South Asian face] + [festive/formal context] = [must add bindiya].

- Under-Representation of Nuance: The datasets lack enough “everyday” images of South Asians without bindis, or they fail to properly label the cultural and religious significance of different types of marks (a bindi vs. a tilak vs. a decorative sticker).
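The correlation described above can be measured on a captioned dataset before any training happens. The following is a minimal sketch, assuming captions are available as plain strings. The sample captions, the term lists, and the function names are all hypothetical and only illustrate how often a forehead-mark term appears alongside a South Asian identity term.

```python
import re

# Hypothetical term lists; a real audit would need culturally informed vocabularies.
IDENTITY_TERMS = {"indian", "south asian", "desi"}
MARK_TERMS = {"bindi", "bindiya", "tilak"}


def contains_any(caption: str, terms: set[str]) -> bool:
    """Return True if any term appears in the caption as a whole word or phrase."""
    text = caption.lower()
    return any(re.search(rf"\b{re.escape(t)}\b", text) for t in terms)


def cooccurrence_rate(captions: list[str]) -> float:
    """Share of identity-term captions that also mention a forehead mark."""
    identity = [c for c in captions if contains_any(c, IDENTITY_TERMS)]
    if not identity:
        return 0.0
    with_mark = [c for c in identity if contains_any(c, MARK_TERMS)]
    return len(with_mark) / len(identity)


# Made-up captions, for illustration only.
sample_captions = [
    "Indian bride with red bindi at wedding",
    "Traditional Indian woman wearing bindi and sari",
    "Indian software engineer at her desk",
    "South Asian dancer with tilak at a festival",
    "Portrait of a woman in Paris",
]

rate = cooccurrence_rate(sample_captions)
print(f"{rate:.0%} of identity-tagged captions mention a forehead mark")
```

A high rate in a real dataset would suggest that the model sees few counterexamples, which is the imbalance this section describes.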

2. The “Prompting” Ambiguity:

- Vague Requests: A user might prompt for “a beautiful Indian woman at a festival.” The AI, trained on biased data, defaults to the most statistically common representation, which includes a bindiya.

- Lack of Negative Prompting: Most users don’t know to use negative prompts (e.g., "no bindi, no forehead mark"). Without this explicit instruction, the AI reverts to its default, biased patterns.

3. Overfitting and Amplification:

- Once the AI learns this association, it can over-amplify it. It starts adding bindis to South Asian faces in completely inappropriate contexts, such as a CEO in a boardroom, a doctor in a hospital, or a person simply walking down the street, because it is built to generate statistically “likely” features and those statistics come from flawed data.

4. The “Exoticization” Algorithm:

- Some critics argue that these models are often trained on a Western gaze that views other cultures through an “exotic” lens. The AI learns to add elements it deems “authentic” or “ethnic,” which often translates to stereotypical accessories like bindis, regardless of their relevance.

Part 2: The Financial and Practical Cost of Errors

When an AI generates an incorrect image, the financial cost isn’t just the few cents of compute power. It’s a cascading effect:

1. Wasted Subscription Credits:

- Most premium AI image generators operate on a credit system. For example:

  - Midjourney: Plans start at ~$10/month for a limited number of GPU minutes.

  - Getty Images Generative AI: Uses a credit pack system.

- The Cost: If a user spends 5 credits generating 20 images to get a perfect headshot and 15 of them have an erroneous bindiya, 75% of those credits are wasted. For a business generating hundreds of images, this waste scales significantly.

2. The Cost of Human Correction:

- Prompt Engineering Time: An artist or employee must spend extra time crafting intricate negative prompts (e.g., "no bindi, no forehead jewelry, no tilak, no red dot..."). This is billable time spent correcting bias instead of doing creative work.

- Photo Editing Costs: If the final image is almost perfect except for the bindi, a company must pay a graphic designer to edit it out. This can cost $10–$50+ per image depending on complexity and the designer’s rate.

3. Project Delays and Missed Deadlines:

- The iterative process of “generate -> see error -> correct prompt -> generate again” slows down workflows for marketing agencies, filmmakers creating storyboards, and product designers. Time is money.

4. Reputational Cost:

- Using a stereotyped image in a global marketing campaign can lead to public backlash, accusations of cultural insensitivity, and a loss of trust. The cost of rebuilding a brand’s reputation can be immense and far exceeds the cost of generating the image.

Estimated Total Wasted Spend: While a global figure is impossible to calculate, for any medium-sized business regularly using AI imagery, the combined cost of wasted credits, correction time, and editing could easily amount to thousands of dollars per month.
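The cost components listed in this part can be combined into a rough monthly estimate. The sketch below is illustrative only: the function names, parameters, and every input value in the usage example are assumptions, not measured data. The only figures taken from the text are the 15-out-of-20 split and an editing fee inside the $10 to $50 range.

```python
def wasted_fraction(total_images: int, flawed_images: int) -> float:
    """Fraction of generated images (and so of credits spent) that were unusable."""
    if total_images <= 0:
        raise ValueError("total_images must be positive")
    return flawed_images / total_images


def monthly_waste(
    credit_spend: float,         # monthly spend on generation credits, in dollars
    flawed_share: float,         # fraction of generations with the unwanted mark
    prompt_hours: float,         # hours spent reworking prompts
    hourly_rate: float,          # billable rate for that time
    edited_images: int,          # images sent to a designer for retouching
    edit_cost_per_image: float,  # designer fee per image
) -> float:
    """Sum wasted credits, prompt-rework time, and manual editing fees."""
    wasted_credits = credit_spend * flawed_share
    rework = prompt_hours * hourly_rate
    editing = edited_images * edit_cost_per_image
    return wasted_credits + rework + editing


# The example from the text: 15 flawed images out of 20.
share = wasted_fraction(20, 15)
print(f"Wasted share of credits: {share:.0%}")

# Hypothetical inputs for a single team; not measured data.
estimate = monthly_waste(
    credit_spend=200.0,
    flawed_share=share,
    prompt_hours=10.0,
    hourly_rate=50.0,
    edited_images=20,
    edit_cost_per_image=30.0,
)
print(f"Estimated monthly waste: ${estimate:,.2f}")
```

With these made-up inputs, most of the total comes from human time and editing fees rather than from the credits themselves, which matches the point that compute is the smallest part of the cost.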

Part 3: Solutions for Future Renders

Fixing this requires action from both AI developers and users.

For AI Developers & Companies:

- Improve Training Data Curation: Actively debias datasets. This includes:

  - Balanced Representation: Ingest more images of South Asians in diverse, modern, and professional settings.

  - Better Labeling: Implement detailed, culturally aware tagging (e.g., distinguishing a religious tilak from a decorative bindi).

  - Ethical Oversight: Employ diverse teams of linguists, anthropologists, and ethicists to audit training data and model outputs.

- Implement “Cultural Safety” Filters: Develop built-in algorithms that detect and prevent the generation of known stereotypical accessories without explicit user prompting. The model should have a “null” default for cultural attributes.

- Enhance Prompt Understanding: Develop models that better understand the absence of a feature. Improving natural language processing for negative prompts is key.
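One way to picture the “Cultural Safety” filter described above is as a post-generation check that compares what the user asked for with what an attribute detector reports in the image. The sketch below assumes such a detector already exists and returns a set of text labels. The watchlist, the label names, and the function `unrequested_attributes` are hypothetical, and a production filter would need far more careful design than keyword matching.

```python
# Hypothetical watchlist mapping a detector label to prompt words that count
# as an explicit request for that attribute.
WATCHLIST = {
    "bindi": {"bindi", "bindiya"},
    "tilak": {"tilak", "tilaka"},
}


def unrequested_attributes(prompt: str, detected_labels: set[str]) -> set[str]:
    """Return watched attributes found in the image but never asked for."""
    words = set(prompt.lower().replace(",", " ").split())
    flagged = set()
    for label, request_words in WATCHLIST.items():
        if label in detected_labels and not (words & request_words):
            flagged.add(label)
    return flagged


# Detector output is simulated here; no real model is called.
prompt = "a professional Indian female lawyer in a courtroom, photorealistic"
detected = {"woman", "courtroom", "bindi"}

flags = unrequested_attributes(prompt, detected)
if flags:
    print("Regenerate or review, unrequested attributes:", sorted(flags))
```

The check flags the attribute only when the prompt did not ask for it, so a user who explicitly requests a bindi still gets one. That is the “null” default the text calls for.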

For Users and Prompt Engineers:

- Master Negative Prompting: This is the most immediate and powerful tool.

  - Example (Midjourney-style --no parameter, which takes one comma-separated list of items to exclude): a professional Indian female lawyer in a courtroom, photorealistic --no bindi, forehead mark, jewelry, sari, traditional clothing

  - Be excessively specific about what you don’t want.

- Use In-Painting and Out-Painting: If the image is 99% correct, use tools like DALL-E’s editor or Photoshop’s Generative Fill to remove the bindiya and have the AI regenerate just that small section.

- Choose the Right Model: Some AI models are better than others at handling cultural specificity. Experiment to find which one produces the least biased results for your needs.

- Provide Detailed Feedback: Most AI platforms have a “report” or thumbs-down button. Use it. Reporting these errors helps developers fine-tune their models.
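The negative-prompting advice above can be wrapped in a small helper so the same exclusion list is reused across a project. This is a minimal sketch: the function names and the dictionary keys are assumptions, not any particular platform's API. The --no form mirrors the suffix used in the example above (a Midjourney-style parameter), and the prompt/negative_prompt pair mirrors the separate negative-prompt field that many Stable Diffusion front ends expose.

```python
# Terms taken from the article's examples; extend as needed for a project.
DEFAULT_EXCLUSIONS = ["bindi", "forehead mark", "tilak", "forehead jewelry"]


def with_no_suffix(prompt: str, exclusions: list[str]) -> str:
    """Append exclusions as a single '--no a, b, c' suffix."""
    if not exclusions:
        return prompt
    return f"{prompt} --no {', '.join(exclusions)}"


def as_prompt_pair(prompt: str, exclusions: list[str]) -> dict[str, str]:
    """Split into positive and negative prompt strings for tools with two fields."""
    return {"prompt": prompt, "negative_prompt": ", ".join(exclusions)}


base = "a professional Indian female lawyer in a courtroom, photorealistic"

print(with_no_suffix(base, DEFAULT_EXCLUSIONS))
print(as_prompt_pair(base, DEFAULT_EXCLUSIONS)["negative_prompt"])
```

Keeping the exclusions in one list means a team can review and extend them in a single place instead of retyping them for every prompt.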

Conclusion: Beyond the Bindiya

The erroneous bindiya is a small, visible symptom of a much larger disease: AI bias. It demonstrates how the prejudices and oversimplifications of our world are being baked into the technologies of our future. Addressing it isn’t just about saving a few dollars on wasted credits; it’s about building AI that sees humanity in its full, diverse, and nuanced glory, rather than reducing it to a set of stereotypical patterns. The solution requires a concerted effort from engineers, ethicists, and users to demand and create better, more representative systems.